The perceptron was one of the earliest models in artificial intelligence, first described by Frank Rosenblatt in a 1957 report and published in 1958. It was designed to mimic, in simplified form, how neurons in the human brain work: it learns from data and makes decisions. Using a single layer of artificial neurons, the perceptron could classify simple patterns, such as distinguishing between shapes or letters. However, scientists soon recognized its main limitation. The perceptron could only solve linearly separable problems, that is, simple cases where the data can be divided by a straight line. It couldn’t handle problems that need a curved or more complex boundary, such as the XOR problem. Researchers tried adding more layers to make it “think” more deeply, but they lacked the mathematical tools and computing power to train those layers effectively. This limitation contributed to the temporary decline of AI research in the 1970s, known as the “AI winter.”

Later, with advances in computing power and the spread of the backpropagation algorithm in the 1980s, scientists were finally able to train multi-layer perceptrons, paving the way for modern neural networks and deep learning.

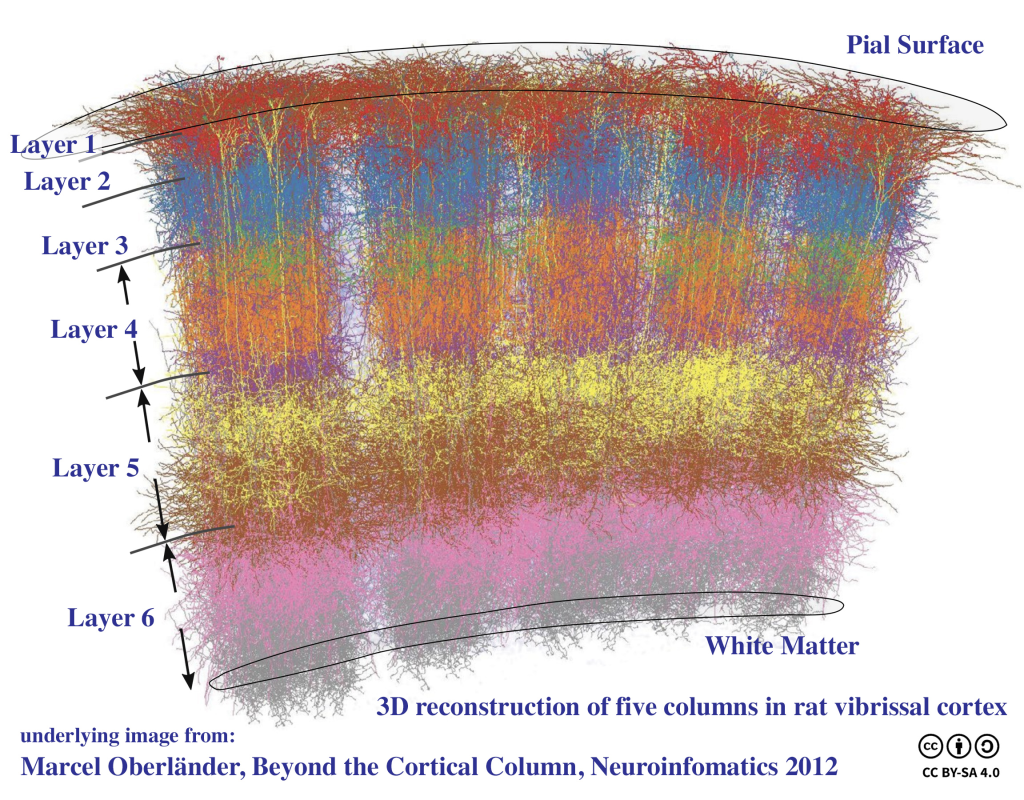

3D reconstruction of five cortical columns in rat vibrissal cortex

The Perceptron: The First Neural Network

The Perceptron was invented in 1958 by Frank Rosenblatt, an American psychologist and computer scientist at Cornell University. It was one of the first artificial neural network models that could learn from examples, and it was designed to mimic how human neurons process information. Rosenblatt’s Perceptron could learn simple patterns and was considered a significant breakthrough in early artificial intelligence (AI). However, in 1969, Marvin Minsky and Seymour Papert published a book showing that single-layer perceptrons couldn’t solve problems like the XOR function. This criticism contributed to a prolonged “AI winter,” during which research and funding slowed significantly. The concept returned stronger in the 1980s with multi-layer neural networks and backpropagation, forming the foundation of modern deep learning.
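
To make the idea of linear separability concrete, here is a minimal sketch of a single neuron trained with the textbook perceptron learning rule. It is not taken from Rosenblatt's original work; the function names (`train_perceptron`, `accuracy`), the learning rate, and the epoch limit are illustrative assumptions. The sketch trains the same neuron on AND, which a straight line can separate, and on XOR, which it cannot.

```python
def train_perceptron(samples, epochs=100, lr=1.0):
    """Train one neuron with a step activation using the perceptron rule.

    samples: list of ((x1, x2), target) pairs with targets 0 or 1.
    Returns the learned weights and bias.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - pred  # +1, 0, or -1
            if delta != 0:
                # Nudge the decision line toward the misclassified point.
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:  # every sample classified correctly: stop early
            break
    return w, b


def accuracy(samples, w, b):
    """Fraction of samples the trained neuron classifies correctly."""
    correct = 0
    for (x1, x2), target in samples:
        pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
        correct += int(pred == target)
    return correct / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND, *train_perceptron(AND))
xor_acc = accuracy(XOR, *train_perceptron(XOR))
print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

On AND the rule settles on a line that classifies all four points. On XOR no such line exists, so at least one of the four points is always misclassified, however long training runs.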

The Problem: Why Early Scientists Couldn’t Build Multiple Layers

When Frank Rosenblatt created the Perceptron (1958), it was a single-layer neural network that could only classify linearly separable data (classes that a straight-line boundary can divide). At the time, scientists could not build trainable networks with two to five layers, loosely modeled on layers of neurons in the brain. They knew that adding more layers could make the model more powerful, but here’s why they couldn’t do it back then:

| Reason | Explanation |
|---|---|
| No training method | Researchers had no practical way to adjust the weights in the hidden layers. Backpropagation, the algorithm that trains multi-layer networks, did not become widely known until the 1980s. |
| Limited computing power | Computers in the 1960s were too slow and had too little memory to train multi-layer models. Even simple perceptrons were slow to train on small datasets. |
| Mathematical limits | In 1969, Minsky & Papert showed that single-layer perceptrons couldn’t represent functions like XOR. This exposed a hard logical limit of the model and contributed to years of reduced funding. |
| Loss of interest | After this criticism, many funders and universities came to see neural networks as a dead end, which fed into the “AI winter” of the 1970s. |
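
The point that more layers add power can be shown with a small hand-wired example. The sketch below is an illustration only: the weights and thresholds are chosen by hand, not learned, and the function name `xor_two_layer` is hypothetical. It shows that one hidden layer is enough to represent XOR, so what was missing at the time was a way to find such weights automatically.

```python
def step(z):
    """Threshold activation: fire (1) when the weighted sum is positive."""
    return 1 if z > 0 else 0


def xor_two_layer(x1, x2):
    """Two-layer network with hand-picked weights that computes XOR."""
    h_or = step(x1 + x2 - 0.5)    # hidden unit 1: fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # hidden unit 2: fires only if both inputs are 1
    return step(h_or - h_and - 0.5)  # output: "OR but not AND"


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
results = [xor_two_layer(x1, x2) for x1, x2 in inputs]
for (x1, x2), y in zip(inputs, results):
    print(x1, x2, "->", y)
```

Each hidden unit draws its own straight line through the input space, and the output unit combines the two, which is something a single-layer perceptron cannot do.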

The Comeback

In the 1980s, researchers like Geoffrey Hinton, David Rumelhart, and Ronald Williams rediscovered and popularized backpropagation, which allowed computers to train networks with multiple layers. This was the birth of modern deep learning.
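
As a rough sketch of what backpropagation does, the code below trains a tiny network with one hidden layer on XOR using plain Python. Everything specific here is an assumption made for illustration: the function name `train_xor`, three sigmoid hidden units, a squared-error loss, a learning rate of 0.5, and a fixed random seed. It is not the 1986 formulation, and depending on the starting weights such a small network may not reach a perfect solution.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden=3, epochs=5000, lr=0.5, seed=0):
    """Train a 2-input, one-hidden-layer network on XOR with backpropagation."""
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    # w_h[j] = [weight for x1, weight for x2, bias] of hidden unit j
    w_h = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # w_o = hidden-to-output weights, followed by the output bias
    w_o = [rng.uniform(-1, 1) for _ in range(hidden + 1)]

    def forward(x):
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + w[2]) for w in w_h]
        y = sigmoid(sum(w_o[j] * h[j] for j in range(hidden)) + w_o[hidden])
        return h, y

    def loss():
        return sum((forward(x)[1] - t) ** 2 for x, t in data) / len(data)

    initial_loss = loss()
    for _ in range(epochs):
        g_h = [[0.0] * 3 for _ in range(hidden)]  # hidden-layer gradients
        g_o = [0.0] * (hidden + 1)                # output-layer gradients
        for x, t in data:
            h, y = forward(x)
            # Error signal at the output (derivative of 0.5 * (y - t)^2).
            d_out = (y - t) * y * (1 - y)
            for j in range(hidden):
                # Propagate the error signal backward to hidden unit j.
                d_hid = d_out * w_o[j] * h[j] * (1 - h[j])
                g_h[j][0] += d_hid * x[0]
                g_h[j][1] += d_hid * x[1]
                g_h[j][2] += d_hid
                g_o[j] += d_out * h[j]
            g_o[hidden] += d_out
        # Gradient descent step on every weight, including hidden ones.
        for j in range(hidden):
            for k in range(3):
                w_h[j][k] -= lr * g_h[j][k]
            w_o[j] -= lr * g_o[j]
        w_o[hidden] -= lr * g_o[hidden]
    outputs = [forward(x)[1] for x, _ in data]
    return initial_loss, loss(), outputs


initial_loss, final_loss, outputs = train_xor()
print("loss before training:", round(initial_loss, 4))
print("loss after training: ", round(final_loss, 4))
print("outputs for (0,0), (0,1), (1,0), (1,1):", [round(o, 2) for o in outputs])
```

The key step is the `d_hid` line: the output error is passed backward through the output weights, which gives each hidden unit its own error signal. That is the piece researchers in the 1960s did not have.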

Full History Timeline

| Year | Event | Significance |
|---|---|---|
| 1958 | Frank Rosenblatt invents the Perceptron | An early artificial neuron model that could learn from examples; it handled linearly separable patterns. |
| 1960s | Perceptron hype begins | Scientists believed it could lead to human-like intelligence; widely publicized. |
| 1969 | Minsky & Papert publish the book Perceptrons | Showed single-layer perceptrons could not solve problems like XOR, revealing limitations. |
| 1970s | AI Winter | Research slowed; neural networks were largely abandoned due to lack of progress and computing power. |
| 1986 | Backpropagation rediscovered (Rumelhart, Hinton, Williams) | Enabled training of multi-layer networks, leading to multi-layer perceptrons. |
| 1990s–2000s | Better computers and data | Neural networks gradually improved and were applied to handwriting and speech recognition. |
| 2010s–Present | Deep Learning revolution | Modern GPUs, large datasets, and multi-layer networks enabled advanced AI applications like ChatGPT, image recognition, and self-driving cars. |